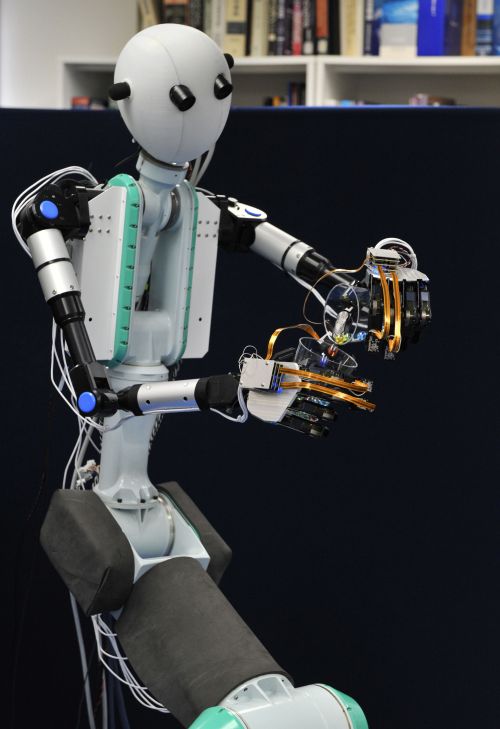

A Japanese-developed robot that mimics the movements of its human controller is bringing the Hollywood blockbuster “Avatar” one step closer to reality.

Users of the TELESAR V don special equipment that allows them not only to direct the actions of a remote machine, but also to see, hear and feel the same things as their doppelganger android.

“When I put on the devices and move my body, I see my hands having turned into the robot hands. When I move my head, I get a different view from the one I had before,” said researcher Sho Kamuro.

“It’s a strange experience that makes you wonder if you’ve really become a robot,” he told AFP.

Professor Susumu Tachi, who specializes in engineering and virtual reality at Keio University’s Graduate School of Media Design, said systems attached to the operator’s headgear, vest and gloves send detailed instructions to the robot, which then mimics the user’s every move.

At the same time, an array of sensors on the android relays a stream of information which is converted into sensations for the user.

The thin polyester gloves the operator wears are lined with semiconductors and tiny motors that let the user “feel” what the mechanical hands are touching: whether a surface is smooth or bumpy, as well as heat and cold.

The robot’s “eyes” are actually cameras capturing images that appear on tiny video screens in front of the user’s eyes, allowing them to see in three dimensions.

Microphones on the robot pick up sounds, while its speakers let the operator make their voice heard by people near the machine.

The TELESAR (TELexistence Surrogate Anthropomorphic Robot) is still a far cry from the futuristic creations of James Cameron’s “Avatar,” where U.S. soldiers are able to remotely control the genetically engineered bodies of an extra-terrestrial race they wish to subdue.

But Tachi says it could have far more immediate, and more benign, applications, such as working in high-risk environments like the inside of Japan’s crippled Fukushima nuclear plant, though it is still early days.

“I think further research and development could enable this to go into areas too dangerous for humans and do jobs that require human skills,” he said.

Japan’s famously advanced robot technology was found wanting during the crisis at Fukushima, where foreign expertise had to be called on for the machines that went inside reactor buildings as nuclear meltdowns began.

Tachi said a “safety myth” had grown up around atomic technology, preventing research on the kind of machines that could help in the wake of a disaster.

But he said his kind of robot technology could help with the long and difficult task of decommissioning reactors at Fukushima -- a process that could take three decades.

A remote-controlled android that allows its user to experience what is happening far away may have more than just industrial applications, he added.

“This could be used to talk with your grandpa or grandma living in a remote place and deepen communications,” he said. (AFP)

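<Korean article>

Japan develops ‘avatar robot’

A technology that lets a human and a robot share not only movements but also sight, hearing and touch has been developed in Japan.

AFP reported on the 10th that the technology, developed by a research team led by Professor Susumu Tachi of Japan’s Keio University, lets an operator remotely control a robot’s movements while experiencing everything the robot “sees, hears and feels.”

Named TELESAR (TELexistence Surrogate Anthropomorphic Robot), the robot reproduces even the smallest movements of an operator wearing headgear, a vest and gloves, using devices built into that equipment.

Conversely, what the robot “feels” is relayed through sensors attached to it: tiny motors in the operator’s equipment let the operator sense heat or cold and whether a surface is smooth or rough. The operator also receives sound and three-dimensional images through the robot’s microphones and cameras.

After using the system, researcher Sho Kamuro said, “It felt as if my hands had turned into robot hands, and I had a completely different view from before,” adding that he felt as though he had become a robot.

Professor Tachi explained that the technology could allow work to be carried out safely in hazardous environments such as the Fukushima nuclear power plant, which was damaged by last year’s earthquake and tsunami.

Arguing that a “myth” of nuclear safety had held back the development of ways to respond when a disaster strikes, he said this kind of robot technology would be a great help in the long and arduous task of decommissioning the Fukushima plant.

Beyond these uses, the professor said, the robot could also let users meet people far away indirectly.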